Optical character recognition (OCR) is probably one of the oldest applications of machine learning.

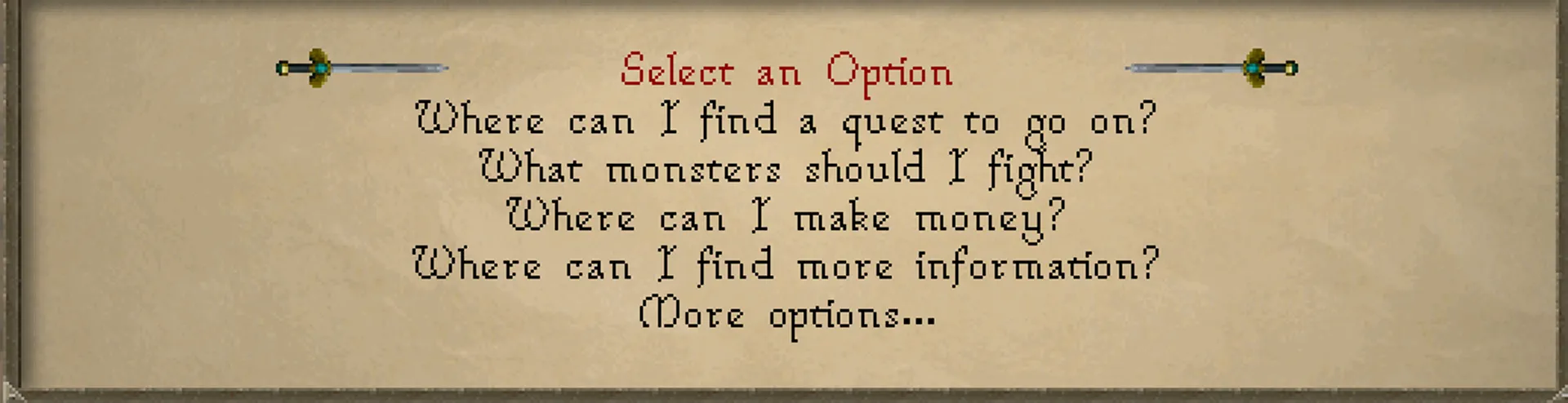

A simple experiment: fine-tuning an LSTM (long-short term memory) neural net to identify font in-game for the MMORPG OldSchool RuneScape. (Back in 2021, I trained an object recognition model for the game).

Before generative models, OpenAI focused on playing video games – starting with the OpenAI Gym and later Dota 2 bots. Why did I choose RuneScape? It's a fairly simple but deep game. It requires lots of clicking and optimization but doesn't require deep strategy. Most of all, the client is (mostly) open-sourced.

Generate training data. We're going to use synthetic training data. Tesseract has a utility that will generate images a corresponding character bounding boxes from text (text2image). While I could have used a variety of text corpora, I chose to use a bunch of text found in the game. I used the database in osrsbox-db. I found the fonts in RuneStar/fonts. I could have performed some data augmentation but chose not to – for this use case; the text is always printed uniformly (same spacing, orientation, etc.).

Training. There are generally three choices:

- Train a model from scratch – Since we have a large amount of training data, we can train a model from scratch. Tesseract's training scripts don't

- Fine-tune a model – Using the english Tesseract LSTM, fine-tune it to understand a new font or new characters.

- Train the top layers – Randomize and retrain the top layers of the network. Useful if you're teaching it a new language with completely new charactesrs (e.g., an elfish font).

I tried both fine-tuning and training a new model from scratch. I did this a few times, as I found that (1) training was quick on my machine 64GB RAM and (2) it was easy to overfit the model.

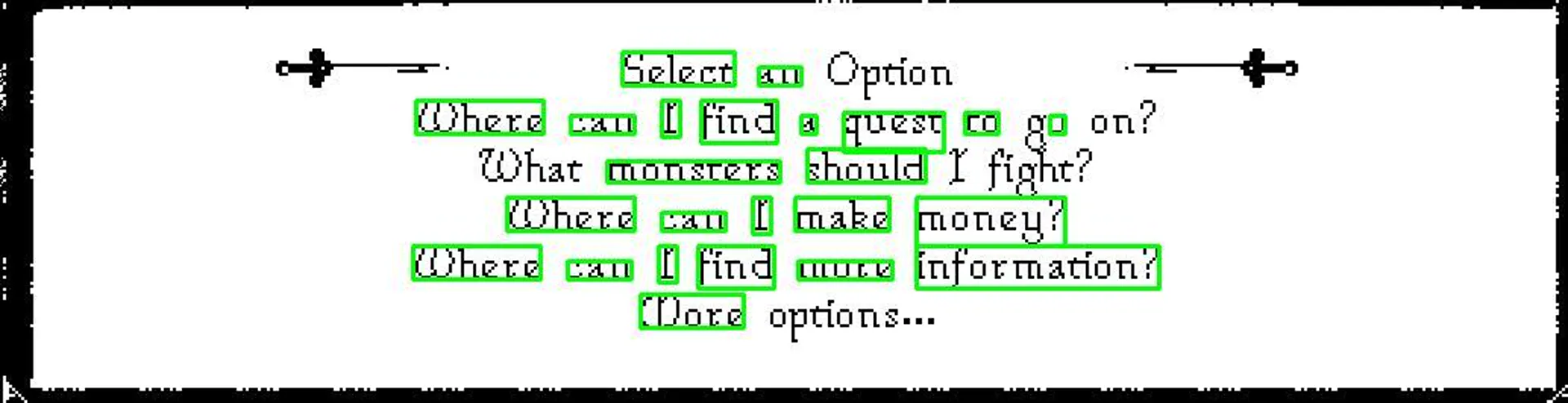

For inference, I took raw screenshots from the game and processed them minimally. This is probably where most optimization could come from – preparing the data so that it's optimal for the model: not too bold, not too thin, black text on white background, non-text artifacts removed.

A few observations about the process:

- Tesseract is a legacy OCR engine that's retroactively added neural network support. However, this means that training and inference are still done through the binary, not something more modern (like TensorFlow or PyTorch).

- Preparing the training data and preparing the data for inference take up the most time and are essential to good results. Much of this is done manually – thresholding, color segmentation, and other image manipulation techniques to separate text. More modern architectures probably just train different models for each of these steps.

- Tesseract finds it hard to identify pixelated fonts.

You can find the code (and the trained models) on my GitHub.