Large language models are especially good at generating new examples. This is used for everything from generating unit tests to generating few-shot examples. But what if you started to move past few-shot to full synthetic data sets? You get model arbitrage.

Alpaca 7B was trained for less than $600. It used OpenAI's model to expand a set of 175 human written instruction/output pairs and generate more than 52,000 instruction-following examples to train their model with. Alpaca is fine-tuned on LLaMA (from Meta), so the from-scratch cost isn't exactly $600, but the effective cost is magnitudes smaller when building on open-source models.

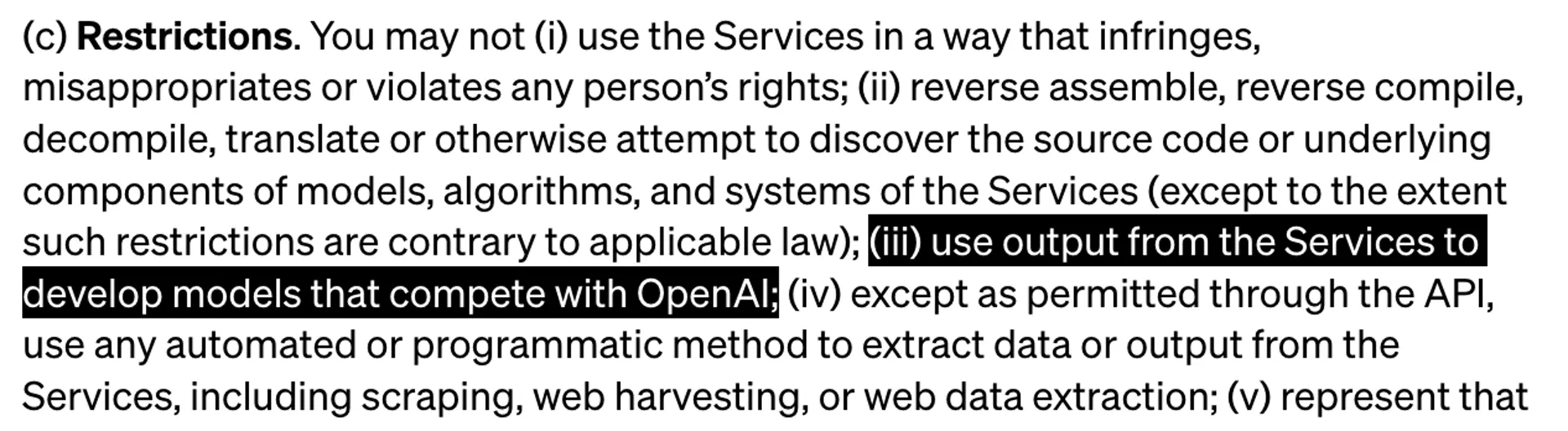

Sure, this is against OpenAI's terms and conditions (which is why Alpaca is "non-commercial"), but as more models become open-source, can you really stop this sort of model arbitrage?

Arbitrage will make the reasoning ability of foundational models converge. Any model that outperforms will simply be used to generate training data for others.