Even if LLMs don’t unlock new capabilities in programming, we are already seeing double-digit productivity increases for software developers: writing code faster (more effective code completion), debugging code faster (better than Stack Overflow), and generating test cases faster. This shifts the automation frontier.

The automation frontier answers the question: when does a task make sense to automate? (xkcd 1205, h/t swyx.io).

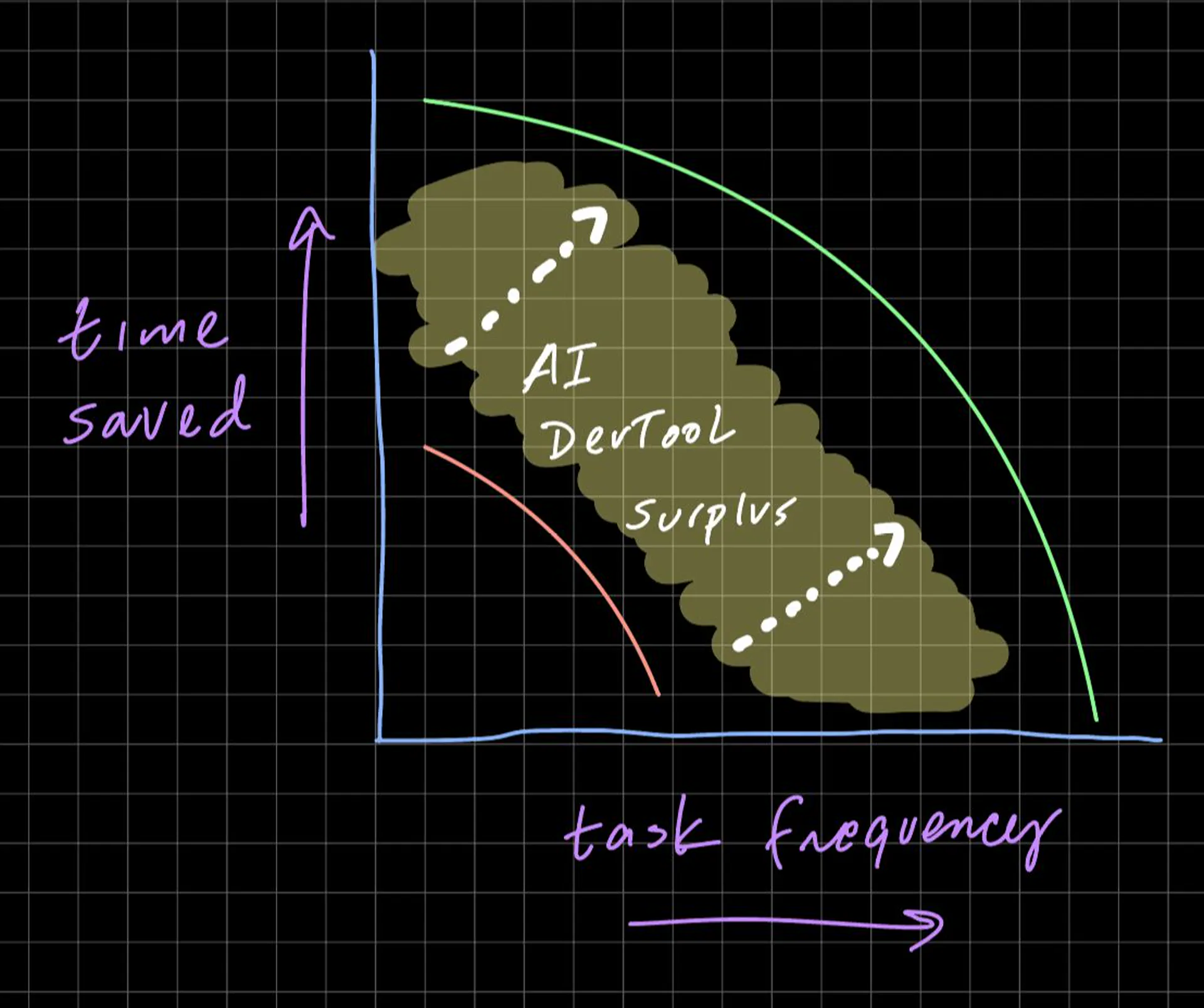

A simplified model has two variables:

- How much time can you save with automation?

- How often is the task performed?

The frontier is the curve where `time saved * task frequency - time to automate = 0`; automation pays off whenever that expression is greater than zero. Anything to the right of the curve should be automated, and anything to the left should be done manually.
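As a rough sketch of that inequality, the check can be written in a few lines of Python. The function names and the example numbers are hypothetical, and the sketch assumes `task_frequency` means the number of times the task is performed over whatever horizon you care about, with every duration in the same unit (minutes here).

```python
def net_benefit(time_saved: float, task_frequency: float, time_to_automate: float) -> float:
    """Net time gained by automating, in the same unit as the inputs.

    Assumption: task_frequency is the number of runs over the chosen horizon.
    """
    return time_saved * task_frequency - time_to_automate


def should_automate(time_saved: float, task_frequency: float, time_to_automate: float) -> bool:
    """True when the task sits on the 'automate' side of the frontier."""
    return net_benefit(time_saved, task_frequency, time_to_automate) > 0


# Hypothetical task: saves 2 minutes per run, costs 480 minutes to automate.
print(should_automate(2, 300, 480))  # 2 * 300 - 480 = 120, so True
print(should_automate(2, 200, 480))  # 2 * 200 - 480 = -80, so False
```

The same task flips from "do it manually" to "automate it" purely because it is performed more often, which is the trade-off the curve captures.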

You can either spend time shaving a few seconds off extremely frequent tasks or a larger amount of time off infrequent ones. LLMs and the downstream AI-augmented developer tools shift the frontier by lowering the time to automate, so more tasks land on the side where automation pays off. This means more automated tasks. And the area in between these curves is large.
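To make the shift concrete, here is a minimal sketch that applies the same inequality to a handful of made-up tasks, before and after the time to automate drops. The task list, the `automation_cost_scale` parameter, and the 50% reduction are illustrative assumptions, not measurements.

```python
from typing import List, Tuple

# (name, time saved per run, runs over the horizon, time to automate), all in minutes.
# These tasks and numbers are hypothetical.
Task = Tuple[str, float, float, float]

TASKS: List[Task] = [
    ("rename files", 1, 100, 60),
    ("monthly report", 30, 12, 480),
    ("one-off migration", 60, 1, 600),
]


def worth_automating(tasks: List[Task], automation_cost_scale: float = 1.0) -> List[str]:
    """Names of tasks where time saved * frequency exceeds the scaled automation cost."""
    return [
        name
        for name, time_saved, frequency, time_to_automate in tasks
        if time_saved * frequency - automation_cost_scale * time_to_automate > 0
    ]


before = worth_automating(TASKS)                            # original automation cost
after = worth_automating(TASKS, automation_cost_scale=0.5)  # assume tooling halves the cost
newly_automated = [name for name in after if name not in before]

print(before)           # ['rename files']
print(after)            # ['rename files', 'monthly report']
print(newly_automated)  # ['monthly report']
```

Tasks in `newly_automated` are the ones sitting in the area between the old and new curves: not worth automating before, worth it once the cost drops.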

Of course, this is a simplified model of the world. There’s probably even more surplus to be gained with automation: are there network effects to automating tasks? Better uses of the newly gained time?